Introduction

In our applications, we manage many secrets, such as passwords, tokens, certificates and encryption keys, that we don’t want exposed. Keeping secrets safe is not easy, and it becomes even more complicated as the organization grows, with new teams, projects, technologies and servers. This increases the probability of a breach, especially if we do not pay enough attention to security. A typical anti-pattern is storing secrets in a configuration file that is then committed to a repository on GitHub. We should aim to minimize the number of people who have access to this sensitive data and ensure that access can easily be revoked when needed.

We will tackle the challenge of securely storing application configuration using HashiCorp Vault, referred to simply as Vault. It is a secrets-management tool in which you can store all the kinds of sensitive data mentioned above.

Besides storing our secret data securely, Vault supports other features out of the box:

- Audit logs with details about access to and changes of data.

- Data encryption, also called “encryption as a service”: Vault encrypts and decrypts data that is then stored somewhere else, and the complexity of managing the encryption keys is delegated to Vault.

- Dynamic secrets: secrets generated on demand for other systems, such as databases or AWS.

- Authentication and authorization: access to secrets can be controlled and restricted using several different methods.

Vault provides several interfaces to communicate with it: CLI, UI and HTTP API.

As a warm-up, we will play around for a while with the CLI and the UI of Vault running in dev mode, which has a lower entry barrier, so we can familiarize ourselves with the basic concepts faster.

In dev mode, many concerns related to preparing Vault are taken care of, allowing for a smooth start. We will dive deeper into these advanced topics later.

For now, the two most important pieces of information are as follows:

- Everything in Vault is path-based: all operations and resources within Vault are accessed and managed through a hierarchical path structure, similar to a filesystem.

- Access to paths is controlled through policies, and policies are “deny by default”, which means you cannot perform any operation until you have been granted permission.

Use case

We use the key/value (KV) secrets engine to store the .NET app configuration previously kept in the appsettings.json file. Secrets written to Vault are encrypted and then written to the backend storage. Our application will authenticate with Vault and fetch the saved configuration to use it later. As mentioned earlier, we start easy with a server in dev mode and then move to a more complex setup to learn more about Vault’s features.

Dev mode

We run Vault as a Docker container with port 8200 exposed to the host. This lets us open its UI in a browser.

docker run --cap-add=IPC_LOCK -d -p 8200:8200 --name=dev-vault hashicorp/vault

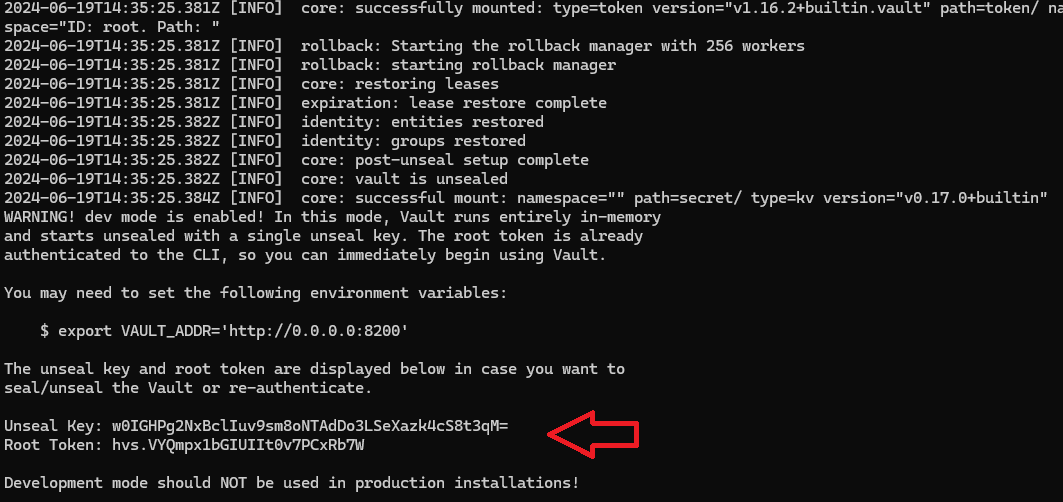

docker logs dev-vault

If you look into the container logs, you will find data such as the root token and the unseal key. The root token lets you log in to the Vault management UI, so for now you can treat it like a password. More on it in subsequent sections.

As mentioned earlier, a CLI is also available. By opening a shell in the Vault container, you can access the same Vault functionality as in the UI.

docker exec -it dev-vault sh

export VAULT_ADDR='http://0.0.0.0:8200'

export VAULT_TOKEN='<root_token>'

Setting the VAULT_ADDR environment variable is required. It tells the Vault CLI client where to find the dev server.

The other variable to set is VAULT_TOKEN. It holds the root token from the logs mentioned earlier and is used to authenticate with Vault.
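Under the hood, the CLI talks to Vault’s HTTP API, so both variables have a direct HTTP counterpart. VAULT_ADDR becomes the base URL and VAULT_TOKEN is sent in the `X-Vault-Token` header. The sketch below only builds such a request for the KV v2 read endpoint (`/v1/{mount}/data/{path}`, optionally with `?version=N`), using the standard `HttpRequestMessage` type. The `VaultKvRequests` class and its method are hypothetical names chosen for this example.

```csharp
using System.Net.Http;

// Hypothetical helper: builds the HTTP request that corresponds to "vault kv get".
public static class VaultKvRequests
{
    // KV v2 read endpoint: GET {VAULT_ADDR}/v1/{mount}/data/{path}[?version=N]
    public static HttpRequestMessage ReadSecret(
        string vaultAddr, string token, string mount, string path, int? version = null)
    {
        var uri = $"{vaultAddr.TrimEnd('/')}/v1/{mount}/data/{path}";
        if (version is not null)
        {
            // Without this parameter Vault returns the newest version.
            uri += $"?version={version}";
        }

        var request = new HttpRequestMessage(HttpMethod.Get, uri);
        // The value of VAULT_TOKEN travels in this header.
        request.Headers.Add("X-Vault-Token", token);
        return request;
    }
}
```

If you send the request with `HttpClient.SendAsync` against the dev server, the response JSON should contain the key/value pairs under `data.data` and the version details under `data.metadata`.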

In the following sections, any listed command that starts with the vault keyword is meant to run in the Vault container. Before running such commands, open a shell in the Vault container and execute the commands listed above to authenticate.

Before we start, there is one thing I want to explain. The KV store currently has two versions: v1 and v2. You should prefer v2 over v1, because v2 lets you version and roll back secrets. Fortunately, in dev mode v2 is enabled by default.

Below are some commands useful for working with secrets engines from the CLI. I suggest familiarizing yourself with them so you can test how Vault works.

vault secrets enable -path=kv1 kv-v2 # enable a new KV v2 secrets engine at the path kv1

vault secrets list -detailed # list all secrets engines with details

vault kv put kv1/hello foo=world # save the key "foo" with the value "world" at path kv1/hello (version 1)

vault kv patch kv1/hello foo=world2 # update the value of an existing key (creates version 2)

vault kv get kv1/hello # read all entries at the path

vault kv get -mount=kv1 -field=foo hello # read only the value of the field called "foo"

vault kv get -mount=kv1 -version=2 hello # read a specific version of the entry

vault kv delete -mount=kv1 hello # soft delete: the latest version is only marked as deleted

vault kv undelete -mount=kv1 -versions=2 hello # if data was deleted by accident, you can restore it

Be careful with the put command. It replaces the current version of the secret with the values specified in the command. If you do not pass the whole set of data, the keys you omit will be missing from the new version, because the newly passed data replaces everything that was set before.

For the get command, if the version is not specified explicitly, the newest one is always returned.
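A toy in-memory model of a single KV v2 path makes two behaviors concrete: put versus patch, and get defaulting to the newest version. It is a sketch of the semantics described above under simplifying assumptions (no deletion, no metadata). It does not reflect how Vault actually stores data, and `KvV2SecretModel` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;

// Toy model of one KV v2 path: every write appends a new, numbered version.
public sealed class KvV2SecretModel
{
    private readonly List<Dictionary<string, string>> _versions = new();

    public int CurrentVersion => _versions.Count;

    // "vault kv put": the new version contains exactly the keys passed, nothing else.
    public int Put(IDictionary<string, string> data)
    {
        _versions.Add(new Dictionary<string, string>(data));
        return CurrentVersion;
    }

    // "vault kv patch": the new version is the latest data with the passed keys merged in.
    public int Patch(IDictionary<string, string> changes)
    {
        if (_versions.Count == 0)
        {
            throw new InvalidOperationException("Nothing to patch: the secret does not exist yet.");
        }

        var merged = new Dictionary<string, string>(_versions[^1]);
        foreach (var (key, value) in changes)
        {
            merged[key] = value;
        }

        _versions.Add(merged);
        return CurrentVersion;
    }

    // "vault kv get": the newest version unless a specific one (starting at 1) is requested.
    public IReadOnlyDictionary<string, string> Get(int? version = null)
    {
        var number = version ?? CurrentVersion;
        if (number < 1 || number > _versions.Count)
        {
            throw new ArgumentOutOfRangeException(nameof(version));
        }

        return _versions[number - 1];
    }
}
```

Suppose you put `foo` and `bar`, then patch `foo`, then put only `foo` again. After the third write, `Get()` no longer contains `bar`, because the second put replaced the whole secret. Version 2 still holds both keys.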

Code example

We are going to prepare a solution that stores sensitive app configuration in Vault and fetches it into the application on startup, instead of keeping it in the appsettings file.

For the sake of simplicity, we will start by using the root token for authentication. This is not recommended in production, because the root token is like sudo: it grants unconstrained privileges. Be very careful not to expose this token. Later on, we will prepare something more in line with security standards.

The example is based on a TodoApp whose connection string is stored in Vault instead of in the configuration file. I recommend cloning this repository locally, because the terminal commands presented assume that you are in the repository root directory. Each part of the article has its own git tag, so you can see the whole solution and test it.

Engine enabling instruction

The solution presented in this part of the article can be found under the tag vault-dev-basic.

Switch to this tag before you move on, because one of the app configuration sections is used in the instructions below.

At this point, for simplicity, we don’t need to create a new engine. We can use the already existing one called “secret”. Follow the instructions below to configure it in the UI.

- Open the secrets engines list at http://localhost:8200/ui/vault/secrets and log in using the root token copied from the container logs.

- The displayed list should contain 2 rows. Select the one called “secret”.

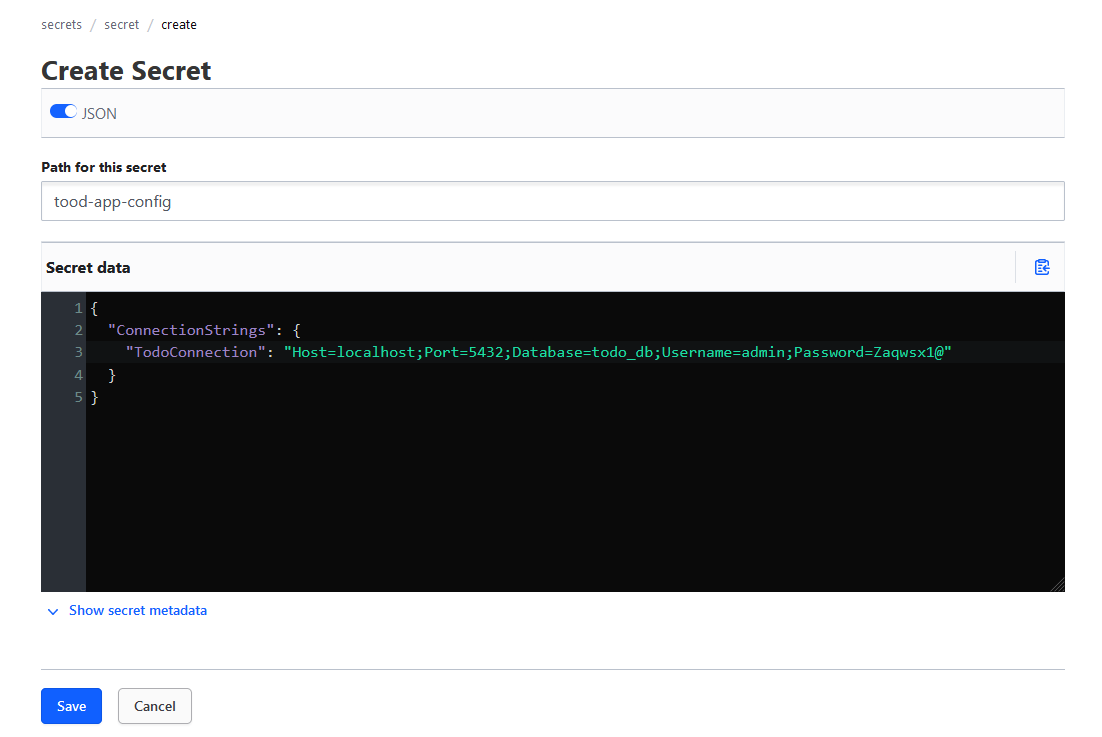

- Click the “Create secret” button to add a new secret.

- Fill in the form: type todo-app-config as the path and enable the “JSON” option, which lets us copy and paste the JSON config from appsettings.Development.json:

{ "ConnectionStrings": { "TodoConnection": "Host=localhost;Port=5432;Database=todo_db;Username=admin;Password=Zaqwsx1@" } }

In the UI it should look like this:

- Save the changes.

We now need to fetch the configuration stored in the secrets engine and load it into the application on startup.

Fortunately, there is already a NuGet package, VaultSharp.Extensions.Configuration, which allows Vault to be used as a configuration source in .NET apps.
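This works because .NET configuration is a flat dictionary with colon-separated keys. The JSON stored in Vault therefore has to end up under keys such as `ConnectionStrings:TodoConnection`, exactly as if it came from appsettings.json. The sketch below shows that mapping with `System.Text.Json`. It illustrates the convention under the assumption that nested objects map to `:`-separated keys and array items to numeric indexes. It is not the package’s actual code, whose rules may differ in details, and `JsonConfigFlattener` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Illustration of the .NET configuration key convention: nested JSON objects
// become ":"-separated keys, and array items get their index as a key segment.
public static class JsonConfigFlattener
{
    public static Dictionary<string, string?> Flatten(string json)
    {
        // Configuration keys are case-insensitive in .NET, hence the comparer.
        var result = new Dictionary<string, string?>(StringComparer.OrdinalIgnoreCase);
        using var document = JsonDocument.Parse(json);
        Visit(document.RootElement, null, result);
        return result;
    }

    private static void Visit(JsonElement element, string? prefix, Dictionary<string, string?> result)
    {
        switch (element.ValueKind)
        {
            case JsonValueKind.Object:
                foreach (var property in element.EnumerateObject())
                {
                    Visit(property.Value, prefix is null ? property.Name : $"{prefix}:{property.Name}", result);
                }
                break;
            case JsonValueKind.Array:
                var index = 0;
                foreach (var item in element.EnumerateArray())
                {
                    Visit(item, $"{prefix}:{index++}", result);
                }
                break;
            case JsonValueKind.Null:
                result[prefix ?? string.Empty] = null;
                break;
            default:
                // Strings keep their value; numbers and booleans keep their raw JSON text.
                result[prefix ?? string.Empty] = element.ValueKind == JsonValueKind.String
                    ? element.GetString()
                    : element.GetRawText();
                break;
        }
    }
}
```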

Once it is installed, we need to specify how to connect to Vault. We add a new dedicated section to the appsettings file with the data we used when creating the secret.

{

"Vault": {

"Url": "http://localhost:8200",

"Token": "<root_token>",

"BasePath": "todo-app-config",

"MountPoint": "secret",

"LoadConfiguration": true

}

}

Then we map this data to an object of the VaultConfiguration class, which we use to configure the Vault connection.

public sealed class VaultConfiguration

{

public VaultConfiguration()

{

}

public string Url { get; set; }

public string Token { get; set; }

public string BasePath { get; set; }

public string MountPoint { get; set; }

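public bool LoadConfiguration { get; set; }

// Read by AddVault below; when absent from appsettings, periodic reloading stays off.

public bool ConfigRefreshEnabled { get; set; }

public int ConfigRefreshInterval { get; set; } = 300; // seconds between checks (assumed default)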

}

Following the standard approach in the .NET world, we create a new extension method that encapsulates the Vault setup logic.

public static class VaultSetup

{

public static IServiceCollection AddVault(

this IServiceCollection services,

IConfigurationBuilder configurationBuilder,

string configurationSectionName = "Vault")

{

if (string.IsNullOrWhiteSpace(configurationSectionName))

{

throw new MissingVaultConfigurationException(configurationSectionName);

}

var vaultConfig = GetSection<VaultConfiguration>(configurationBuilder.Build(), configurationSectionName);

if (vaultConfig.LoadConfiguration)

{

// Authenticate with the root token for now.

AbstractAuthMethodInfo authMethod = new TokenAuthMethodInfo(vaultConfig.Token);

configurationBuilder.AddVaultConfiguration(

() => new VaultOptions(

vaultConfig.Url,

authMethod,

reloadOnChange: vaultConfig.ConfigRefreshEnabled,

reloadCheckIntervalSeconds: vaultConfig.ConfigRefreshInterval),

vaultConfig.BasePath,

mountPoint: vaultConfig.MountPoint

);

if (vaultConfig.ConfigRefreshEnabled)

{

services.AddHostedService<VaultChangeWatcher>();

}

}

return services;

}

private static T GetSection<T>(IConfiguration configuration, string sectionName) where T : class, new()

{

var section = configuration.GetRequiredSection(sectionName);

var model = new T();

section.Bind(model);

return model;

}

}

First, we map the settings section to an object of the class prepared earlier.

Currently, we choose authentication with the root token by using the TokenAuthMethodInfo class.

We pass the connection details and the location of our secrets to the AddVaultConfiguration extension method provided by the installed NuGet package.

The problem with this solution is that we are using the root token, which is insecure: in the event of a breach, someone could gain unrestricted access to all data in Vault. Moreover, the documentation advises against using the root token in production deployments.

The second issue is that dev mode keeps all data in memory. Every time we restart the Docker container, all saved changes are lost, and we have to set up Vault from scratch.

If you switched to the git tag vault-dev-basic, you can now run the app and test whether everything works.

Remember to replace the root token in the Vault:Token configuration section with the token generated in your Vault container.

cd ./infrastructure

docker-compose up -d # Run database container

cd ./../src/SimpleTodo

dotnet run

To be sure that the connection string is taken from Vault, you can leave the ConnectionStrings:TodoConnection configuration section empty and run the application again.

Use the predefined requests from the api.http file, or Swagger, to test whether new entities are properly added to and fetched from the database.

Persistent setup

Our goal is to store secrets persistently. We also want an authentication method that gives our app only the minimal privileges it needs to work correctly. This acts as a kind of defense against privilege-escalation attacks.

This time we will use a Docker Compose file for the setup.

services:

  vault:

    image: hashicorp/vault:1.16

    container_name: vault

    ports:

      - "8200:8200"

    environment:

      VAULT_LOCAL_CONFIG: '{"storage": {"file": {"path": "/vault/file"}}, "listener": [{"tcp": { "address": "0.0.0.0:8200", "tls_disable": true}}], "api_addr": "http://0.0.0.0:8200", "default_lease_ttl": "168h", "max_lease_ttl": "720h", "ui": true}' # written to local.json in the mounted config directory; the config can also be put in that file directly

    cap_add:

      - IPC_LOCK

    volumes:

      - ./docker/vault/data:/vault/file # the file storage path from VAULT_LOCAL_CONFIG, persisted on the host

      - ./docker/vault/config:/vault/config

      - ./docker/vault/logs:/vault/logs

    command: server

    networks:

      - simple_todo

We map port 8200 to the host so that Vault is available on it again.

The config is passed in an environment variable. Alternatively, you can put it in a local.json file in the mounted config volume.

We don’t want to hassle with certificates, so TLS is disabled. The UI is enabled so that we can prepare Vault through it.

Volumes are mounted so that data is stored persistently, even when the container is restarted.

Now you can run the container, but first switch to the new git tag vault-app-role.

cd infrastructure

docker-compose up -d

When you open the UI in a browser, it asks you to set up the initial set of root keys, which then allow you to unseal Vault. We are going to go through this process using the CLI.

Unsealing

Secrets are encrypted when stored in Vault. As the documentation says, “unsealing is the process of obtaining the plaintext root key necessary to read the decryption key to decrypt the data, allowing access to the Vault”. To obtain the root key, Vault needs to be given the unseal key. The unseal key is split into a group of shares using Shamir’s secret sharing algorithm, and a certain threshold number of shares must be provided to reconstruct it. To increase security, you can distribute the shares among multiple parties. This makes it harder for an attacker to collect enough of them to gain access.

More details can be found in the seal section of the official documentation.
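The textbook version of Shamir’s scheme shows why any 3 of the 5 shares suffice and why their order does not matter. The secret is the constant term of a random polynomial of degree threshold - 1, and each share is a point on that polynomial. Lagrange interpolation at x = 0 recovers the secret from any threshold number of points. This sketch works over the prime field modulo 2^127 - 1 using `BigInteger`. Vault’s own implementation works byte by byte over GF(2^8), so treat the `Shamir` class (a hypothetical name) as an illustration of the idea only.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Numerics;
using System.Security.Cryptography;

// Textbook Shamir secret sharing over the prime field Z_p with p = 2^127 - 1.
// Illustration only: Vault splits its key byte by byte over GF(2^8) instead.
public static class Shamir
{
    private static readonly BigInteger Prime = BigInteger.Pow(2, 127) - 1;

    public static List<(BigInteger X, BigInteger Y)> Split(BigInteger secret, int shareCount, int threshold)
    {
        if (secret < 0 || secret >= Prime) throw new ArgumentOutOfRangeException(nameof(secret));
        if (threshold < 2 || threshold > shareCount) throw new ArgumentException("Need 2 <= threshold <= shareCount.");

        // f(x) = secret + a1*x + ... + a(t-1)*x^(t-1) with random coefficients a1..a(t-1).
        var coefficients = new BigInteger[threshold];
        coefficients[0] = secret;
        for (var i = 1; i < threshold; i++)
        {
            coefficients[i] = new BigInteger(RandomNumberGenerator.GetBytes(16), isUnsigned: true) % Prime;
        }

        // Share number x is the point (x, f(x)), evaluated with Horner's rule.
        var shares = new List<(BigInteger X, BigInteger Y)>();
        for (var x = 1; x <= shareCount; x++)
        {
            BigInteger y = 0;
            for (var i = threshold - 1; i >= 0; i--)
            {
                y = (y * x + coefficients[i]) % Prime;
            }
            shares.Add((x, y));
        }
        return shares;
    }

    // Lagrange interpolation at x = 0 recovers f(0) = secret from any threshold shares, in any order.
    public static BigInteger Combine(IEnumerable<(BigInteger X, BigInteger Y)> shares)
    {
        var points = shares.ToList();
        BigInteger secret = 0;
        for (var i = 0; i < points.Count; i++)
        {
            BigInteger numerator = 1, denominator = 1;
            for (var j = 0; j < points.Count; j++)
            {
                if (i == j) continue;
                numerator = numerator * Mod(-points[j].X) % Prime;                  // (0 - x_j)
                denominator = denominator * Mod(points[i].X - points[j].X) % Prime; // (x_i - x_j)
            }
            // Division in Z_p is multiplication by the inverse (Fermat: d^(p-2) mod p).
            var basis = numerator * BigInteger.ModPow(denominator, Prime - 2, Prime) % Prime;
            secret = (secret + points[i].Y * basis) % Prime;
        }
        return secret;
    }

    private static BigInteger Mod(BigInteger value) => ((value % Prime) + Prime) % Prime;
}
```

Calling `Combine` on any three of the five shares, for example `shares[4]`, `shares[0]` and `shares[2]`, returns the original secret. This is the property that lets the unseal shares be entered in any order.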

Follow the commands below to unseal Vault.

docker exec -it vault sh

export VAULT_ADDR='http://0.0.0.0:8200'

vault operator init # generates the unseal key shares and the initial root token

The last command generates the root token and 5 shares of the unseal key. Copy them and store them securely; we will use them in a moment in the unseal process. To unseal, you have to provide 3 of the 5 shares, as stated in the message in the terminal.

vault operator unseal

Execute this command 3 times, passing a different share of the unseal key each time. The order of the shares does not matter.

Initialization is performed only once, when Vault is started with a new storage backend. Unsealing, however, is required after every restart of Vault.

Then open (or refresh) the UI at http://localhost:8200 and log in with the root token.

Creating a new secrets engine

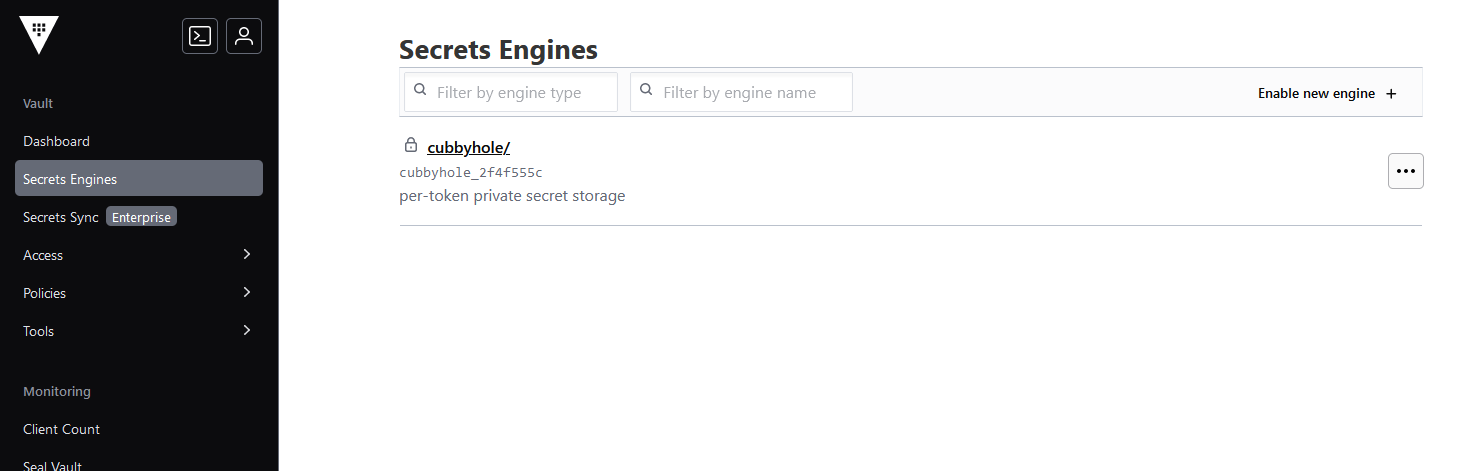

In the first approach, we used an already existing engine. This time we start by enabling a new secrets engine.

- Open the Secrets Engines page.

- Select the KV option.

- Fill in the Path input with the value app-configs, as in the prior example.

- Confirm enabling the engine.

Now you can follow the same steps as in the Engine enabling instruction section to store the app configuration in the new secrets engine, starting from the 3rd step.

Access

The goal is to give our application as few rights as possible.

It only needs to read the saved configuration.

We need to specify a policy, which provides a declarative way to grant or forbid access to certain paths and operations in Vault.

In the “Policies” tab, we create a new policy that specifies these access rights, a.k.a. capabilities.

Create a new policy called todo-app-config-access with the content below.

path "app-configs/*" {

capabilities = ["list"]

}

path "app-configs/data/todo-app-config" {

capabilities = [ "read", "list" ]

}

In the introduction I mentioned that everything in Vault is path-based, and the same applies to policies. When the app authenticates to obtain a token, this policy is attached to the token and only the specified capabilities are allowed. In this example, only the read and list capabilities are granted, so the app cannot modify any stored secrets. Note that the policy path contains data/. For a KV v2 engine, the secret contents are served under the app-configs/data/ prefix, even though the CLI and UI let you refer to the secret simply as todo-app-config.
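A simplified model can show how the policy above is evaluated. It assumes only exact paths and a trailing `*` glob. An exact match takes priority over a glob, a longer glob takes priority over a shorter one, and everything no rule matches is denied. Vault’s real rules also cover `+` segment wildcards, the `deny` capability and the merging of several policies, so treat `PolicyModel` (a hypothetical name) as an illustration only.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Simplified model of how one Vault policy answers "may I do <capability> on <path>?".
// Only exact paths and a trailing "*" glob are modelled; "+" segments and "deny" are not.
public sealed class PolicyModel
{
    private readonly IReadOnlyDictionary<string, string[]> _rules;

    public PolicyModel(IReadOnlyDictionary<string, string[]> rules) => _rules = rules;

    public bool IsAllowed(string path, string capability)
    {
        // An exact path rule takes priority over any glob rule.
        if (_rules.TryGetValue(path, out var exact))
        {
            return exact.Contains(capability);
        }

        // Otherwise the most specific (longest) matching "prefix*" rule applies.
        var glob = _rules
            .Where(rule => rule.Key.EndsWith('*')
                && path.StartsWith(rule.Key.TrimEnd('*'), StringComparison.Ordinal))
            .OrderByDescending(rule => rule.Key.Length)
            .Select(rule => rule.Value)
            .FirstOrDefault();

        // Deny by default: no matching rule means no capabilities.
        return glob is not null && glob.Contains(capability);
    }
}
```

With the todo-app-config-access rules loaded:

- reading `app-configs/data/todo-app-config` is allowed;
- reading any other secret under `app-configs/data/` is not, because the glob grants only `list`;
- paths outside `app-configs/` are denied by default.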

New app authentication method

Previously, a token was used as the authentication method. For machines and apps, one of the recommended methods is AppRole. This approach needs a role ID and a secret ID, which play the roles of username and password in the standard human-oriented flow. Using this pair, the app can obtain a token and then use it to authenticate its requests.

export VAULT_TOKEN='<root_token>'

# Enable the AppRole auth method

vault auth enable approle

# Create a role

vault write auth/approle/role/todo-app \
    token_ttl=2m \
    token_max_ttl=10m \
    token_policies=todo-app-config-access

# Fetch the role ID for the previously created role

vault read auth/approle/role/todo-app/role-id

# Fetch a secret ID for the previously created role

vault write -f auth/approle/role/todo-app/secret-id

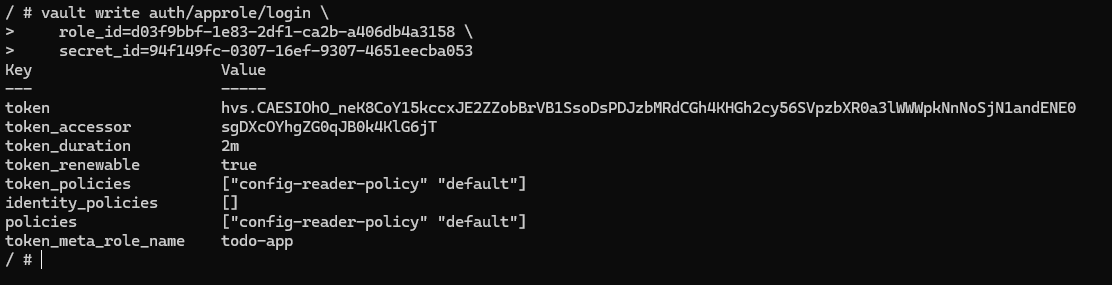

# Fill in the parameters with the values returned by the previous commands and verify that you can obtain a new token

vault write auth/approle/login \
    role_id=<role_id> \
    secret_id=<secret_id>

If you execute all of these commands in the Vault CLI, a new token should be issued.
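The login step has a plain HTTP equivalent: a POST to `/v1/auth/approle/login` with the role ID and secret ID in a JSON body. The sketch below only builds that request; `AppRoleLogin` is a hypothetical helper. In the app itself, VaultSharp’s `AppRoleAuthMethodInfo` performs this call for you.

```csharp
using System.Net.Http;
using System.Text;
using System.Text.Json;

// Hypothetical helper mirroring "vault write auth/approle/login role_id=... secret_id=...".
public static class AppRoleLogin
{
    public static HttpRequestMessage Build(string vaultAddr, string roleId, string secretId)
    {
        // Login needs no X-Vault-Token header: the role ID / secret ID pair is the credential.
        var body = JsonSerializer.Serialize(new { role_id = roleId, secret_id = secretId });
        return new HttpRequestMessage(HttpMethod.Post, $"{vaultAddr.TrimEnd('/')}/v1/auth/approle/login")
        {
            Content = new StringContent(body, Encoding.UTF8, "application/json"),
        };
    }
}
```

On success, Vault returns the new token in the `auth.client_token` field of the JSON response. Its lifetime, in seconds, is in `auth.lease_duration` and reflects the role’s `token_ttl`.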

To present the AppRole auth method, I decided to simplify the case in this article and generate both IDs upfront. As you can see in the role creation command above, the token is short-lived. Two minutes should be enough to start the application and fetch the secrets from Vault; after that, we no longer need the token.

The recommended way to use AppRole is to deliver the two ID values separately, through two different channels. A role should be created per application, so that each application has a unique role ID. Its value is not a secret and can even be embedded in the app config or set as an environment variable.

More attention should be paid to the secret ID, which might be delivered to the app by the deployment pipeline. In a simple scenario, Jenkins fetches a wrapped secret ID from Vault, and the application unwraps it and uses it to obtain a token. Wrapping helps protect sensitive data from being exposed in transit. The fetched data is wrapped, a single-use token is generated, and this token is passed to the eligible party, which uses it to unwrap the original data. If that party cannot unwrap the data because the token was already used, a breach has probably happened. In that case, we should react according to the procedure prepared for such a situation.
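A toy model shows the single-use property that makes wrapping work as a tripwire. The wrapped value is stored behind a random token and removed on the first successful unwrap, so a second unwrap fails. This is an in-memory illustration with hypothetical names (`WrappingModel`, `TryUnwrap`), not Vault’s API. Real wrapping tokens also carry a TTL.

```csharp
using System;
using System.Collections.Concurrent;
using System.Security.Cryptography;

// Toy model of response wrapping: the data sits behind a random, single-use token.
public sealed class WrappingModel
{
    private readonly ConcurrentDictionary<string, string> _cubbyhole = new();

    public string Wrap(string secret)
    {
        var token = Convert.ToHexString(RandomNumberGenerator.GetBytes(16));
        _cubbyhole[token] = secret;
        return token;
    }

    // TryRemove makes unwrapping atomic and one-shot: only the first caller gets the data.
    // A legitimate recipient whose first unwrap fails should assume the token was intercepted.
    public bool TryUnwrap(string token, out string? secret) => _cubbyhole.TryRemove(token, out secret);
}
```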

New auth method in code

Two new fields, AppRoleId and AppRoleSecretId, have to be added to the configuration class.

public sealed class VaultConfiguration

{

// rest of the code omitted for brevity

public string AppRoleId { get; set; }

public string AppRoleSecretId { get; set; }

}

We use these fields in the extension method to decide which auth method to use.

public static class VaultSetup

{

public static IServiceCollection AddVault(

this IServiceCollection services,

IConfigurationBuilder configurationBuilder,

string configurationSectionName = "Vault")

{

// rest of the code omitted for brevity

AbstractAuthMethodInfo authMethod;

if (string.IsNullOrWhiteSpace(vaultConfig.Token))

{

authMethod = new AppRoleAuthMethodInfo(vaultConfig.AppRoleId, vaultConfig.AppRoleSecretId);

}

else

{

authMethod = new TokenAuthMethodInfo(vaultConfig.Token);

}

// ...

}

}

Now, if you leave the Token config field empty, the AppRole authentication method is applied. Ideally, these values should be injected by the pipeline instead of being hard-coded in the config file.

{

"Vault": {

"Url": "http://localhost:8200",

"Token": "",

"BasePath": "todo-app-config",

"MountPoint": "app-configs",

"AppRoleId": "<app_role_id>",

"AppRoleSecretId": "<app_role_secret_id>",

"LoadConfiguration": true

}

}
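Environment variables are a common way to inject the AppRole values from a pipeline instead of keeping them in appsettings.json. The built-in .NET environment-variable configuration provider reads `Vault__AppRoleSecretId` as the key `Vault:AppRoleSecretId`. In the default ASP.NET Core host, that provider is registered after the JSON files, so it overrides them. The sketch below mimics just that naming rule and the last-source-wins override with plain dictionaries. `EnvironmentOverrides` is a hypothetical helper; in the app itself you would rely on the provider rather than on this code.

```csharp
using System.Collections.Generic;

// Hypothetical helper mimicking two rules of .NET configuration:
// "__" in an environment variable name stands for the ":" separator,
// and a source applied later overrides keys from earlier sources.
public static class EnvironmentOverrides
{
    public static string ToConfigurationKey(string variableName) =>
        variableName.Replace("__", ":");

    public static void Apply(
        IDictionary<string, string?> settings,
        IEnumerable<KeyValuePair<string, string>> environment)
    {
        foreach (var (name, value) in environment)
        {
            settings[ToConfigurationKey(name)] = value;
        }
    }
}
```

In a pipeline, this means setting, for example, `Vault__AppRoleSecretId` in the environment of the process that runs the app, while appsettings.json keeps only the placeholder.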

If you followed all the steps, the app should run just as with the previous token-based approach, but this time using a different authentication method.

Dynamic secrets

What if we could generate database access on demand, for a limited time and with only the privileges needed, no more and no less? Up to now, we assumed that the connection string was prepared upfront, put into the KV store by us, and then fetched from there on startup. Now we want to generate database credentials on demand, when they are needed. This reduces the risk of someone stealing them, and we also know they are used only by the one app that requested them. When they are no longer needed, we can revoke them.

The app uses a database schema named “todo”, so first we need to create it.

To see the full code, switch to the git tag vault-dynamic-secrets.

cd ./infrastructure

docker-compose up -d

Execute the commands below only if you do not have this database schema yet. If you ran the app previously, the schema should already exist and you can skip them.

docker exec -it postgres_todo bash

psql -U admin -d todo_db

CREATE SCHEMA todo;

Now that the schema exists, we configure the dynamically generated database credentials. We use the database credentials defined in the Docker Compose file.

# Enable the database secrets engine if it is not already enabled

vault secrets enable database

# Configure Vault with the proper plugin and connection information

vault write database/config/todo-postgresql \
    plugin_name=postgresql-database-plugin \
    connection_url="postgresql://{{username}}:{{password}}@postgres_todo:5432/todo_db?sslmode=disable" \
    allowed_roles=todo-app-role \
    username="admin" \
    password="Zaqwsx1@"

# Configure a role that maps a name in Vault to an SQL statement to execute to create the database credential

vault write database/roles/todo-app-role db_name="todo-postgresql" \
    creation_statements="
CREATE ROLE \"{{name}}\" WITH LOGIN PASSWORD '{{password}}' VALID UNTIL '{{expiration}}';
GRANT USAGE ON SCHEMA todo TO \"{{name}}\";
GRANT CREATE ON SCHEMA todo TO \"{{name}}\";
GRANT SELECT ON ALL TABLES IN SCHEMA todo TO \"{{name}}\";" \
    default_ttl="1h" \
    max_ttl="24h"

# Generate a new credential to test that it works by reading from the /creds endpoint with the role name

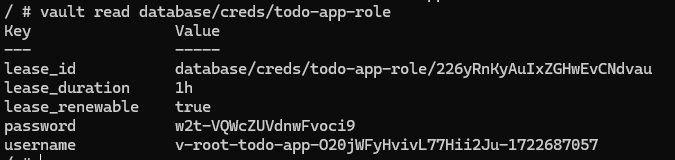

vault read database/creds/todo-app-role

These commands allow credentials to be generated on demand. When new credentials are requested, a newly generated database user gets a role with privileges to create tables and to insert and fetch data. These credentials are returned to the app, which uses them to communicate with the database.

The privilege to create tables is required because the SimpleTodo app uses EF Core, and its migrations are executed on app startup.

When we generate credentials, a new lease is created. A lease holds information about how long the generated credentials are valid. When the time is up, the credentials are no longer valid, because Vault can revoke them automatically. As consumers of the data, we need to monitor whether the lease is still valid.

- We can renew a lease before it expires.
- Renewal may not be possible because the maximum TTL has been reached. In that case, we can generate completely new credentials with a new lease and renew it until we hit the maximum TTL again.
- When we no longer want to use the credentials, we can revoke the lease. It is invalidated immediately, which prevents any further renewals.
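The lease rules above can be summarized in a small model:

- renewal extends the lease from the current time by the requested increment, but never beyond the maximum TTL counted from issuance;
- a lease already at that cap can no longer be extended;
- revocation invalidates it at once.

`LeaseModel` is a hypothetical, simplified class for illustration. Vault itself returns the granted TTL on each renewal, and the app should rely on that value.

```csharp
using System;

// Simplified lease model for illustration; Vault itself decides the granted TTL.
public sealed class LeaseModel
{
    public LeaseModel(DateTimeOffset issuedAt, TimeSpan ttl, TimeSpan maxTtl)
    {
        IssuedAt = issuedAt;
        MaxTtl = maxTtl;
        ExpiresAt = issuedAt + (ttl < maxTtl ? ttl : maxTtl);
    }

    public DateTimeOffset IssuedAt { get; }
    public TimeSpan MaxTtl { get; }
    public DateTimeOffset ExpiresAt { get; private set; }
    public bool Revoked { get; private set; }

    // The hard limit: no renewal can push the lease past issuance + max TTL.
    public DateTimeOffset MaxExpiresAt => IssuedAt + MaxTtl;

    public bool IsValid(DateTimeOffset now) => !Revoked && now < ExpiresAt;

    // Renewal extends the lease to now + increment, capped at MaxExpiresAt.
    // Returns false for expired, revoked or already capped leases: new credentials are needed then.
    public bool TryRenew(DateTimeOffset now, TimeSpan increment)
    {
        if (!IsValid(now) || ExpiresAt >= MaxExpiresAt)
        {
            return false;
        }

        var requested = now + increment;
        ExpiresAt = requested < MaxExpiresAt ? requested : MaxExpiresAt;
        return true;
    }

    // Revocation takes effect immediately and blocks further renewals.
    public void Revoke() => Revoked = true;
}
```

Take a 1 h TTL and a 2 h maximum as an example. Two renewals bring the lease to the 2 h cap, after which `TryRenew` returns false and the app has to request new credentials.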

We want to put our app in charge of renewing leases or generating new credentials.

First, we need to extend the privileges in the previously created todo-app-config-access policy. Paste the code below right after the privileges already added.

path "database/creds/todo-app-role" {

capabilities = [ "read", "list" ]

}

path "sys/leases/renew" {

capabilities = [ "create" ]

}

path "sys/leases/revoke" {

capabilities = [ "update" ]

}

Before we implement a mechanism to renew leases, we want to generate credentials on startup. The app can then use them to execute migrations and to connect to the database in its application-specific domain logic.

public static async Task<IServiceCollection> AddVault(

this IServiceCollection services,

IConfigurationBuilder configurationBuilder,

string configurationSectionName = "Vault")

{

// ...

var vaultAccessor = new VaultAccessor(vaultConfig);

services.AddSingleton(vaultAccessor);

// ...

await services.GenerateDynamicCredentials(configurationBuilder, vaultConfig, vaultAccessor);

// ...

}

private static async Task GenerateDynamicCredentials(

this IServiceCollection services,

IConfigurationBuilder configurationBuilder,

VaultConfiguration vaultConfig,

VaultAccessor vaultAccessor)

{

var leasesStore = new LeasesStore();

services.AddSingleton(leasesStore);

services.AddHostedService<LeasesRenewalScheduler>();

var dynamicCredentialsConfigInitialData = new List<KeyValuePair<string, string?>>();

foreach (var dynamicCredentials in vaultConfig.DynamicCredentials)

{

var result = await vaultAccessor.GenerateDatabaseCredentials(dynamicCredentials.RoleName, dynamicCredentials.MountPoint);

await leasesStore.AddNewLease(

new Lease(

result.LeaseId,

TimeSpan.FromSeconds(result.LeaseDurationSeconds),

dynamicCredentials.ConfigSectionToReplace));

var replacedSetting = dynamicCredentials

.ValueTemplate

.Replace("", result.Data.Username)

.Replace("", result.Data.Password);

// For debugging purposes only

Console.WriteLine("Generated credentials: user={0} | password={1} | lease_id={2}", result.Data.Username, result.Data.Password, result.LeaseId);

dynamicCredentialsConfigInitialData

.Add(new KeyValuePair<string, string?>(dynamicCredentials.ConfigSectionToReplace, replacedSetting));

}

var source = new DynamicCredentialsConfigurationSource { InitialData = dynamicCredentialsConfigInitialData };

configurationBuilder.Sources.Add(source);

}

Some logic related strictly to communication with the Vault API was moved to VaultAccessor, which acts as an adapter exposing a simplified interface to the API.

To store information about available leases, a LeasesStore was created. LeasesRenewalScheduler uses it to fetch lease metadata; the scheduler watches the state of the leases and reacts according to their duration.

The previous configuration model was extended with a list of DynamicCredentialsEntry items. Each item specifies which credentials to generate and in which configuration section to put them.

public sealed class VaultConfiguration

{

// ...

public ICollection<DynamicCredentialsEntry> DynamicCredentials { get; set; }

}

{

"Vault": {

"DynamicCredentials": [

{

"ConfigSectionToReplace": "ConnectionStrings:TodoConnection",

"ValueTemplate": "Host=localhost;Port=5432;Database=todo_db;Username=;Password=",

"MountPoint": "database",

"RoleName": "todo-app-role",

"AutoRenewal": true

}

]

},

}

With the newly generated username and password, we fill in the template from the ValueTemplate field, which is a connection string. We then put the result into the ConnectionStrings:TodoConnection section, which EF Core uses to connect in the DbContext.
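Filling the template is plain string substitution. The sketch assumes hypothetical `{username}` and `{password}` markers (use whatever markers your ValueTemplate contains) and fails fast when either is missing. The markers must be non-empty, because `string.Replace` throws `ArgumentException` for an empty search string. `CredentialTemplate` is a hypothetical helper.

```csharp
using System;

// Hypothetical helper: fills a connection-string template with generated credentials.
public static class CredentialTemplate
{
    // Assumed placeholder markers; they must be non-empty, because
    // string.Replace throws ArgumentException for an empty search string.
    public const string UserPlaceholder = "{username}";
    public const string PasswordPlaceholder = "{password}";

    public static string Apply(string template, string username, string password)
    {
        if (!template.Contains(UserPlaceholder) || !template.Contains(PasswordPlaceholder))
        {
            throw new ArgumentException("The template must contain both placeholders.", nameof(template));
        }

        return template
            .Replace(UserPlaceholder, username)
            .Replace(PasswordPlaceholder, password);
    }
}
```

A generated password might contain characters such as `;`. In that case, building the string with Npgsql’s `NpgsqlConnectionStringBuilder` (setting its `Username` and `Password` properties) should take care of quoting, which raw substitution does not.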

Besides the startup actions that generate the initial credentials, the rest of the flow is implemented in the LeasesRenewalScheduler class. It is responsible for renewing leases and for generating new credentials when existing leases can no longer be renewed.

I won’t analyze the code of this class step by step, because it is quite similar to the previous code snippets.
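The core decision such a scheduler makes on every tick can be sketched as a pure function:

- do nothing while plenty of time is left;
- renew when the lease nears expiry;
- fall back to fresh credentials when renewal is impossible.

The one-third threshold, `LeaseAction` and `LeaseRenewalRule` are assumptions made for this example, not taken from LeasesRenewalScheduler. The `renewable` flag corresponds to the field Vault returns alongside `lease_id` and `lease_duration`.

```csharp
using System;

public enum LeaseAction { None, Renew, Regenerate }

// Hypothetical decision rule for a background scheduler:
// act once less than a third of the lease duration remains.
public static class LeaseRenewalRule
{
    public static LeaseAction Decide(DateTimeOffset now, DateTimeOffset expiresAt, TimeSpan leaseDuration, bool renewable)
    {
        var remaining = expiresAt - now;

        // An expired lease cannot be renewed; only fresh credentials help.
        if (remaining <= TimeSpan.Zero)
        {
            return LeaseAction.Regenerate;
        }

        // Plenty of time left: do nothing on this tick.
        if (remaining > leaseDuration / 3)
        {
            return LeaseAction.None;
        }

        // Close to expiry: renew if Vault marked the lease renewable, otherwise regenerate.
        return renewable ? LeaseAction.Renew : LeaseAction.Regenerate;
    }
}
```

Keeping the rule free of I/O makes it easy to test. A hosted service could call it periodically for each lease from LeasesStore. If a renewal request fails (for example, at the max TTL), the service would treat that lease as `Regenerate` as well.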

One part of the code may be less obvious: a custom configuration provider that allows the app configuration to be replaced at runtime.

public class DynamicCredentialsProvider : ConfigurationProvider

{

private readonly DynamicCredentialsConfigurationSource _source;

public DynamicCredentialsProvider(DynamicCredentialsConfigurationSource source)

{

_source = source;

if (_source.InitialData != null)

{

foreach (KeyValuePair<string, string?> pair in _source.InitialData)

{

Data.Add(pair.Key, pair.Value);

}

}

}

public void UpdateSettings(KeyValuePair<string, string> settings)

{

Data[settings.Key] = settings.Value;

OnReload();

}

}

This provider is inspired by the built-in MemoryConfigurationProvider. It adds a custom UpdateSettings method, which receives the generated credentials and replaces the specified existing configuration section.

After a change, the OnReload method is invoked, so code that uses e.g. IOptionsMonitor<> is notified that the configuration has changed.

Feel free to check out the whole solution in the SimpleTodo repository.